Deep Learning

What is deep learning?

Deep learning is a subfield of machine learning concerned with algorithms, called artificial neural networks, that are inspired by the structure and function of the brain.

As technology advances rapidly and automation increasingly shapes this era, scientists have looked for ways to teach computers to learn the way a human brain does. The human brain uses neurons to process the raw data it receives and convert it into usable information. Machines are built to mimic the same process, learning and being trained to act like a human brain: in short, to learn from data and act accordingly. Deep learning (DL) has played a major role in putting this idea into practice. DL is a field of science in which a system learns implicitly, improving its performance with experience. It produces models capable of tasks such as image recognition, text prediction and video classification, and these models work in a way loosely inspired by how the brain's neurons identify things.

Deep learning can automate tasks such as text classification, image identification, object detection and decision-making based on previously learned data. Training needs little human intervention, since the network learns the relevant features from the data itself, and it can be performed on unlabeled and unstructured data. The technique builds on core ideas of artificial intelligence (AI) and uses neural networks as its basic components.

Deep Learning and Neural Networks

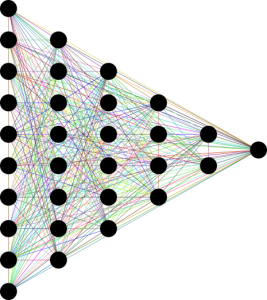

The core of making a machine mimic working human neurons is to model them, loosely, as fully connected (dense) neural networks. These networks are designed to work in a way inspired by biological neural networks. They work best for use cases such as pattern recognition, anomaly detection, time series prediction and signal processing. After being trained on a set of training data, these networks can make predictions on new, unseen inputs, which makes it possible to use them for machine learning tasks such as object identification.

The human brain has a network of billions of interconnected neurons that process data and act together as a single neural network. In deep learning, as the number of features and the scale of the attributes grow, the networks get deeper, with many more hidden layers. When data flows in only one direction through these layers, from input to output, the network is called a feedforward neural network. Artificial neural networks (ANNs) come in numerous kinds, such as the Deep Neural Network (DNN), the Convolutional Neural Network (CNN) and the Recurrent Neural Network (RNN). These networks can be used separately or combined to achieve higher accuracy. Such models are extremely useful in critical fields such as medicine and disease prediction. Deep learning has already played a major role in medicine, and its impact is likely to grow given the high accuracy and degree of automation these models provide. The main network types, and some of the ways each has helped the medical field, are as follows:

- Deep Neural Network (DNN)

A DNN is a neural network that uses many layers to solve a problem. Many hidden layers are stacked between the input and output layers, and each layer learns a progressively more abstract representation of the data. Following the perceptron model, each neuron takes input signals, combines them with weights and a bias, and passes the result through an activation function to produce an output signal; a DNN is essentially a multilayer perceptron. Training uses two passes: a forward pass that computes predictions and a backward pass (backpropagation) that adjusts the weights. DNNs have played a major role in the medical field alone, including identifying infectious fevers, medical recommender systems, medical image analysis, medical diagnosis, modeling treatment regimens from medical registry data, and facial and DNA recognition.
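
The layered forward pass described above can be sketched in plain Python. This is a minimal illustration, not the author's implementation: the helper names (`sigmoid`, `dense_layer`, `forward`), the choice of sigmoid as the activation and all weight and bias values are hypothetical, picked only for the example.

```python
import math


def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of all
    inputs, adds its own bias, and applies the activation function."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def forward(inputs, layers):
    """Forward pass: the output of each layer becomes the input of the next."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases, sigmoid)
    return activations


# Hypothetical toy network: 3 inputs -> 2 hidden -> 2 hidden -> 1 output.
# Each entry is (weight matrix with one row per neuron, list of biases).
layers = [
    ([[0.2, -0.4, 0.1], [0.5, 0.3, -0.2]], [0.0, 0.1]),
    ([[0.7, -0.6], [-0.3, 0.8]], [0.05, -0.05]),
    ([[1.0, -1.0]], [0.0]),
]

output = forward([1.0, 0.5, -1.5], layers)
print(output)
```

Adding a layer only means appending another `(weights, biases)` pair, which is what "deeper" means in practice.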

- Convolutional Neural Network (CNN)

A CNN is a type of neural network designed mainly for grid-like data such as images, and its main task is classification, including text recognition. Near the end of a CNN, the 2D feature maps are typically flattened into a 1D vector before being passed to dense layers. CNNs can also classify text, where convolutions capture the local context around each word, although carrying context forward to predict the next word in a sequence is more naturally handled by recurrent networks. A ConvNet is well suited to capturing spatial and temporal dependencies through the application of many relevant filters. Each filter (kernel) slides across the input in steps whose size is set by the stride, until it has covered the full width of the input. The benefits CNNs have brought to medicine are vast, including collaborative filtering, medical image analysis and facial expression intensity estimation.
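
The sliding kernel and the flattening step can be sketched as follows. This is a minimal pure-Python illustration under assumed names (`conv2d`, `flatten`) with a hypothetical 4x4 input and 2x2 filter; like most deep learning libraries, it computes cross-correlation (the kernel is not flipped) and uses no padding.

```python
def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image` without padding, moving `stride` cells at a
    time, and sum the element-wise products at each position."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    feature_map = []
    for r in range(0, ih - kh + 1, stride):
        row = []
        for c in range(0, iw - kw + 1, stride):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


def flatten(feature_map):
    """Turn a 2D feature map into a 1D list, e.g. before a dense layer."""
    return [value for row in feature_map for value in row]


# Hypothetical 4x4 input and a 2x2 filter that responds to vertical edges.
image = [
    [1, 2, 3, 0],
    [0, 1, 2, 3],
    [3, 0, 1, 2],
    [2, 3, 0, 1],
]
kernel = [[1, -1], [1, -1]]

feature_map = conv2d(image, kernel, stride=2)
print(feature_map)           # [[-2, 2], [2, -2]]
print(flatten(feature_map))  # [-2, 2, 2, -2]
```

With a stride of 2 the filter jumps two cells at a time, so the 4x4 input shrinks to a 2x2 feature map; a stride of 1 would give a 3x3 map.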

- Recurrent Neural Network (RNN)

This type of neural network is most useful where feedforward networks fall short, mainly when the input is sequential data that must be classified or transformed. The input can be represented as a series of data points, such as a time series, and the network carries information from earlier points forward through a hidden state. In medicine, RNNs have shown promising results in collaborative filtering, medical event detection, classifying relations within medical data, and critical tasks such as predicting a patient's life expectancy.
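
A single recurrent unit makes the idea of carrying context forward concrete. The sketch below is a deliberate simplification under stated assumptions: a scalar Elman-style cell with a tanh activation, where the function name `rnn_forward` and the parameter values are hypothetical. Each hidden state depends on the current data point and on the previous hidden state.

```python
import math


def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Run a single-unit recurrent cell over a sequence of data points.

    At each time step the new hidden state combines the current input (via
    w_x) with the previous hidden state (via w_h), so information from
    earlier points is carried forward through the sequence.
    """
    h = h0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical parameters and a short sequence, e.g. readings taken over time.
states = rnn_forward([0.5, 1.0, -0.5, 0.0], w_x=0.8, w_h=0.5, b=0.1)
print(states)
```

Because each state feeds into the next, reordering the sequence generally changes the final state, which is what lets an RNN model order-dependent data.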

Neural Network Working Model

This approach offers a new way of identification by building deep models from training data. It rests on the premise that many real-world problems can be framed as problems of training and prediction. In this project, the proposed model would take in a dataset, learn all the identifiable meaningful features, and build a feedforward neural network with dense layers. Each neuron multiplies its inputs by the corresponding weights, sums the results and adds a bias, which shifts the neuron's output and helps the model fit the data. Activation functions are then applied to introduce non-linearity, which lets the network learn more complex patterns, improves accuracy, and can also help training converge faster.
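
The per-neuron computation described above can be written as a worked equation. The inputs, weights and bias below are hypothetical numbers chosen only to keep the arithmetic easy to follow; $f$ stands for whichever activation function is used.

$$
z = \sum_{i=1}^{n} w_i x_i + b, \qquad y = f(z)
$$

For example, with $x = (1, 2)$, $w = (0.5, -0.25)$ and $b = 0.1$:

$$
z = (0.5)(1) + (-0.25)(2) + 0.1 = 0.1
$$

With ReLU, $f(z) = \max(0, z)$, the output is $y = 0.1$; with the sigmoid, $f(z) = 1 / (1 + e^{-z})$, it is $y \approx 0.525$. The same weighted sum yields different outputs depending on the activation, and it is the non-linear activations that let stacked layers model patterns a single weighted sum cannot.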

The model described above is a perceptron model built from inputs, weights, neurons, activation functions and biases. Initially, the weights are set to random numbers, and they are adjusted during training. As a deep neural network, it contains multiple layers. The first layer, the input layer, is fed the input data; the following hidden layers transform it using weighted sums and activation functions; and the output layer produces the final, transformed result. Training alternates forward propagation and backpropagation, adjusting the weights repeatedly until the model is ready for prediction or identification.
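
The training loop described above (random initial weights, a forward pass, backpropagation and repeated weight updates) can be sketched for a tiny network. This is a minimal sketch, not the project's model: it assumes one hidden layer, sigmoid activations, a mean-squared-error loss and full-batch gradient descent, and the function `train`, its parameters and the logical-OR dataset are hypothetical choices for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, hidden=2, lr=0.5, epochs=2000, seed=0):
    """Train an input -> hidden -> output network with forward propagation
    and backpropagation (full-batch gradient descent on mean squared error)."""
    rng = random.Random(seed)
    n_in, n = len(data[0][0]), len(data)
    # Weights start as random numbers and are adjusted during training.
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j]) for j in range(hidden)]
        y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
        return h, y

    losses = []
    for _ in range(epochs):
        gw1 = [[0.0] * n_in for _ in range(hidden)]
        gb1, gw2, gb2, loss = [0.0] * hidden, [0.0] * hidden, 0.0, 0.0
        for x, target in data:
            h, y = forward(x)  # forward pass
            loss += 0.5 * (y - target) ** 2
            # Backward pass: chain rule from the output layer back to each weight.
            delta_out = (y - target) * y * (1 - y)
            gb2 += delta_out
            for j in range(hidden):
                gw2[j] += delta_out * h[j]
                delta_h = delta_out * w2[j] * h[j] * (1 - h[j])
                gb1[j] += delta_h
                for i in range(n_in):
                    gw1[j][i] += delta_h * x[i]
        losses.append(loss / n)
        # Nudge every weight and bias against its averaged gradient.
        b2 -= lr * gb2 / n
        for j in range(hidden):
            w2[j] -= lr * gw2[j] / n
            b1[j] -= lr * gb1[j] / n
            for i in range(n_in):
                w1[j][i] -= lr * gw1[j][i] / n

    def predict(x):
        return forward(x)[1]

    return losses, predict


# Logical OR as a small, hypothetical training set.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
losses, predict = train(data)
print(losses[0], losses[-1])
print([round(predict(x), 2) for x, _ in data])
```

The loss from the first epoch should be higher than the loss from the last, showing the weights being adjusted toward the training targets.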

This model brings various implementations of the system and technology to light, and proposes to take unlabeled data as the basis for a data-driven, statistically measured leap toward bridging the gap between how a human brain and a computer system process information, in effect giving the system an artificial, neural-network-based brain.